Learning a Predictable and Generative Vector Representation for Objects

People

Paper


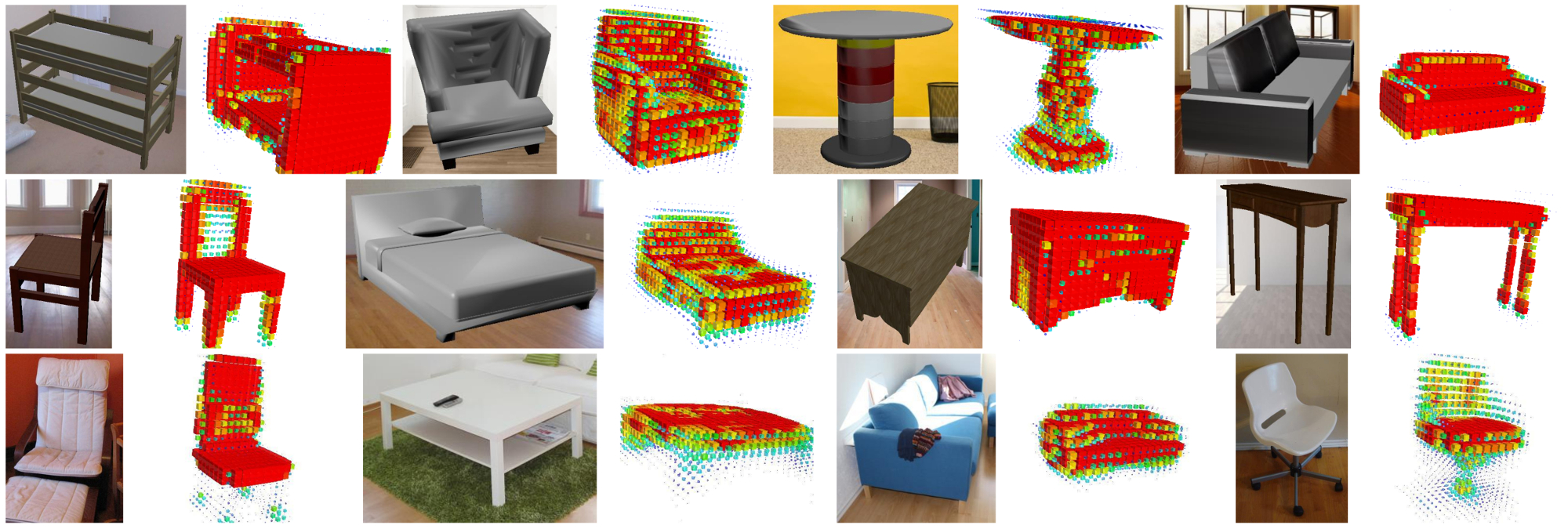

R. Girdhar, D. F. Fouhey, M. Rodriguez and A. Gupta. Learning a Predictable and Generative Vector Representation for Objects. Proc. of European Conference on Computer Vision (ECCV), 2016. [PDF] [arXiv] [code] [models] [Supplementary] [Talk Video] [BibTex]

Acknowledgements

This work was partially supported by a Siebel Scholarship to RG, an NDSEG Fellowship to DF and a Bosch Young Faculty Fellowship to AG. This material is based on research partially sponsored by ONR MURI N000141010934, ONR MURI N000141612007, NSF1320083 and a gift from Google. The authors would like to thank Yahoo! and Nvidia for the compute cluster and GPU donations, respectively. The authors would also like to thank Martial Hebert and Xiaolong Wang for many helpful discussions.